In Part 1 of this blog series, I proposed that closed-loop orchestration (CLO) is a data management problem. In the digital world, data extends well beyond your medical history, social media, and other PII. Configurations, inventories, and monitoring systems are all built on managing, interpreting, and acting on data. CLO is a workflow for manipulating the data around the target environments. Even the policies and constraints need to be expressed as data.

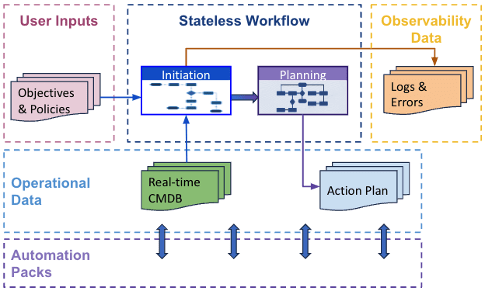

The figure from the first blog is shown below again as a reminder of our framework. We have identified four key data types: user inputs, operational data, observability data, and Automation Packs. The data support the atomic actions of the workflow, which we grouped into five steps: initiation, planning, execution, confirmation, and updates. Automation packs provide API integration into the components of the infrastructure. Remember, these workflows are stateless without this data.

Figure 1 – Generic Data Sets Required to Orchestrate a Workflow
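To make the framework a little more concrete, here is a minimal Python sketch of the idea that a workflow is stateless without its data. The names `WorkflowData`, `Phase`, `run_phase`, and `history` are hypothetical and are not part of any Orchestral.ai product. Each phase receives the four data types and returns an updated copy, so all state lives in the data rather than in the workflow itself.

```python
from dataclasses import dataclass, field, replace
from enum import Enum
from typing import Callable, Dict


class Phase(Enum):
    """The five workflow steps from the framework."""
    INITIATION = "initiation"
    PLANNING = "planning"
    EXECUTION = "execution"
    CONFIRMATION = "confirmation"
    UPDATES = "updates"


@dataclass(frozen=True)
class WorkflowData:
    # The four data types from Figure 1; their contents here are illustrative.
    user_inputs: Dict[str, object] = field(default_factory=dict)
    operational_data: Dict[str, object] = field(default_factory=dict)
    observability_data: Dict[str, object] = field(default_factory=dict)
    automation_packs: Dict[str, Callable[..., object]] = field(default_factory=dict)
    history: tuple = ()  # hypothetical record of phases completed so far


def run_phase(phase: Phase, data: WorkflowData) -> WorkflowData:
    # A phase holds no state of its own: everything it knows arrives in `data`,
    # and everything it learns leaves in the returned copy.
    return replace(data, history=data.history + (phase.value,))


def run_workflow(data: WorkflowData) -> WorkflowData:
    for phase in Phase:  # Enum iteration follows definition order
        data = run_phase(phase, data)
    return data
```

Because `WorkflowData` is frozen, the input is never modified in place; the workflow can be replayed from the same data and will follow the same path.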

This blog will dig into each data type and how it supports the workflow. Our focus is on the initiation phase and how the data gathered during this phase provides a foundation for planning the execution models.

The initiation phase is about gathering the data needed to conduct the workflow. The data must be codified with specific data points, as machines are not good at figuring out intent. Data can be text, tables, tuples, or other forms. Data must be accessible by each element, and there should only be one source of truth for the workflow to eliminate potential conflicts.
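A single source of truth can be enforced at the point where data is gathered. The sketch below is one possible approach, assuming each data point is a key owned by exactly one source system; the class `SourceOfTruth`, the `ConflictError` exception, and the key format are hypothetical. A second system attempting to write the same key is rejected instead of silently creating a competing version.

```python
from dataclasses import dataclass
from typing import Any, Dict


class ConflictError(Exception):
    """Raised when a second source tries to write a data point it does not own."""


@dataclass
class DataPoint:
    value: Any
    source: str  # the system that owns this value


class SourceOfTruth:
    """Hypothetical initiation-phase store: one owning source per data point."""

    def __init__(self) -> None:
        self._points: Dict[str, DataPoint] = {}

    def put(self, key: str, value: Any, source: str) -> None:
        existing = self._points.get(key)
        if existing is not None and existing.source != source:
            # Another system already owns this key; refuse to create
            # a second, possibly conflicting, version of the truth.
            raise ConflictError(
                f"{key!r} is owned by {existing.source!r}, not {source!r}"
            )
        # The owning source may update its own value.
        self._points[key] = DataPoint(value, source)

    def get(self, key: str) -> Any:
        return self._points[key].value
```

For example, after `store.put("vm-42.ip", "10.0.0.5", source="ipam")`, a later write to the same key from `source="cmdb"` raises `ConflictError`, while the `ipam` source can still update its own value.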

Once gathered and organized, this data provides what is needed to perform the workflow, update the systems of record, and log the actions taken. It also shows the impact of any changes and helps identify the root cause of any failures.
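Recording the state before and after each action is what makes that impact and root-cause view possible. Here is a minimal sketch, assuming each action can report its target's state as a dictionary; `ActionRecord`, `ActionLog`, `impact`, and `first_failure` are hypothetical names, not Composer's logging format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class ActionRecord:
    action: str
    target: str
    before: Dict[str, Any]
    after: Dict[str, Any]
    succeeded: bool
    error: Optional[str] = None
    # UTC timestamp captured when the record is created.
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def impact(self) -> Dict[str, Tuple[Any, Any]]:
        """Fields whose values changed, as (before, after) pairs."""
        keys = sorted(set(self.before) | set(self.after))
        return {
            k: (self.before.get(k), self.after.get(k))
            for k in keys
            if self.before.get(k) != self.after.get(k)
        }


class ActionLog:
    """Append-only log of the actions a workflow has taken."""

    def __init__(self) -> None:
        self.records: List[ActionRecord] = []

    def record(self, action: str, target: str, before: Dict[str, Any],
               after: Dict[str, Any], succeeded: bool,
               error: Optional[str] = None) -> ActionRecord:
        # Copy the state dicts so later mutation cannot rewrite history.
        rec = ActionRecord(action, target, dict(before), dict(after), succeeded, error)
        self.records.append(rec)
        return rec

    def first_failure(self) -> Optional[ActionRecord]:
        """Earliest failed action: a starting point for root-cause analysis."""
        return next((r for r in self.records if not r.succeeded), None)
```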

Figure 2 – Automation APIs

Data not only needs to be managed; it also needs context. Context is often overlooked when data from multiple sources is merged into a single repository. The centralized data mechanism must coordinate with the workflow to maintain the data's context with respect to the actions taken and the systems involved.
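One way to keep that context when merging is to carry provenance alongside every value. The sketch below assumes each source delivers records as dicts with hypothetical `system`, `attribute`, `value`, and `observed_at` keys; rather than flattening them into bare values, the hypothetical `merge` function keeps the source and observation time with each one.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class ContextualValue:
    value: object
    source: str            # system the value came from
    system: str            # infrastructure component it describes
    observed_at: datetime  # when the source reported it


def merge(
    feeds: Iterable[Tuple[str, List[dict]]],
) -> Dict[Tuple[str, str], List[ContextualValue]]:
    """Merge records from several sources without stripping their context.

    Values are grouped by (system, attribute) and sorted newest-first, so the
    most recent value is at index 0 while older, differently sourced values
    are retained for comparison.
    """
    merged: Dict[Tuple[str, str], List[ContextualValue]] = {}
    for source, records in feeds:
        for r in records:
            key = (r["system"], r["attribute"])
            merged.setdefault(key, []).append(
                ContextualValue(r["value"], source, r["system"], r["observed_at"])
            )
    for values in merged.values():
        values.sort(key=lambda v: v.observed_at, reverse=True)
    return merged
```

Keeping the older values, instead of overwriting them, lets the workflow see that two systems disagreed and which one reported last.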

The task of gathering this data resembles advanced analytics: data currency, completeness, and quality must all be checked to support the workflow’s actions. Essential pieces that the data management system needs to understand are:

The resulting information represents a real-time CMDB specific to the environment with the context needed to accurately model the workflow and assess the impact of its execution.
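The currency, completeness, and quality checks described above can be sketched as a single validation pass over each record. This is a minimal illustration, not a product feature: the function name `check_record`, the `observed_at` field, the required-field list, the per-field `rules`, and the `max_age` threshold are all assumptions.

```python
from datetime import datetime, timedelta, timezone
from typing import Callable, Dict, List


def check_record(
    record: dict,
    required: List[str],
    rules: Dict[str, Callable[[object], bool]],
    max_age: timedelta,
    now: datetime,
) -> List[str]:
    """Return a list of problems; an empty list means the record is usable."""
    # Completeness: every required field is present and non-empty.
    problems = [f"missing {f}" for f in required if record.get(f) in (None, "")]
    # Currency: the record must be recent enough to act on.
    observed = record.get("observed_at")
    if observed is not None and now - observed > max_age:
        problems.append("stale")
    # Quality: each field that is present must satisfy its rule.
    for f, rule in rules.items():
        if record.get(f) is not None and not rule(record[f]):
            problems.append(f"invalid {f}")
    return problems
```

A workflow could refuse to move from initiation to planning until every record it depends on returns an empty list.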

Data management capabilities were a critical development criterion for Orchestral.ai’s Composer, which was designed with the understanding that data management is key to CLO.

Some of these aspects include:

In addition to the system logs, Composer maintains its own logs to provide information concerning the workflow’s actions.

The focus on data management has elevated Composer to one of the top-functioning CLO engines on the market.

But it is only the beginning for Orchestral.ai.

As we alluded to earlier, when the data is collected as described, its completeness and coherency make it possible to develop and deploy more advanced workflows that use advanced analytics and AI.

And that is exactly what Maestro will bring to the Orchestral suite.

Today’s CLOs work from predefined logic, static workflows, and simple decision trees. Maestro will provide more optimized processes that respond to the reactions it observes. It will also be able to anticipate the impact of changes and compare the results to the expected values. All of this helps refine the system models and drives continuous improvement. In short, a digital twin can be created within the context of the specific environment. To achieve this, however, a standard lexicon must be maintained across all sources to ensure the integrity of the data. That is the discussion the third installment of this series will take up.
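The compare-and-refine loop described above can be illustrated in a few lines. This is a generic sketch and not Maestro’s implementation: `compare_to_expected` flags metrics that miss their expected value by more than a relative tolerance, and `refine` nudges the expectations toward what was observed using simple exponential smoothing. The metric names, `rel_tol`, and `alpha` values are assumptions.

```python
from typing import Dict, Optional, Tuple


def compare_to_expected(
    expected: Dict[str, float],
    observed: Dict[str, float],
    rel_tol: float = 0.1,
) -> Dict[str, Tuple[float, Optional[float]]]:
    """Return metrics that missed their expected value by more than rel_tol.

    Each deviation maps to (expected, observed); observed is None when the
    metric was expected but never reported.
    """
    deviations: Dict[str, Tuple[float, Optional[float]]] = {}
    for metric, exp in expected.items():
        obs = observed.get(metric)
        if obs is None or abs(obs - exp) > rel_tol * abs(exp):
            deviations[metric] = (exp, obs)
    return deviations


def refine(
    expected: Dict[str, float],
    observed: Dict[str, float],
    alpha: float = 0.5,
) -> Dict[str, float]:
    """Move each expected value a fraction alpha toward its observed value."""
    return {
        metric: (exp + alpha * (observed[metric] - exp)) if metric in observed else exp
        for metric, exp in expected.items()
    }
```

For instance, with an expected CPU utilization of 50% and an observed 70%, the deviation is flagged and the refined expectation becomes 60%, so the model’s next prediction starts closer to what the environment actually does.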

Learn more at: Orchestral.ai