In this post, we look at IT orchestration through a slightly different lens. By orchestration we mean an orderly sequence of automations executed across disparate domains, including servers, networks, security, apps, cloud, and more.

As we outlined previously, managing IT systems has become increasingly cumbersome as infrastructure complexity grows. Greater technical complexity also increases the data gathered from the IT infrastructure, which is expected to reach 181 zettabytes by 2025. A significant percentage of this data is monitoring data that the infrastructure itself generates. Monitoring data only becomes useful when it is made context-aware, that is, when it is overlaid with previously acquired inventory data and the entity relations of the target end systems. Once this is achieved, managing a complex infrastructure becomes a data management problem: contextual data and monitoring data must be correlated with each other in real time. The task of efficiently managing the infrastructure, whether configuring or remediating it, is reduced to accurately interpreting the combined data gleaned from it.

Hyperscalers such as Google, Amazon, Facebook, and Twitter have built armies of engineers not only to capture this enormous amount of data appropriately but also to harness its value. Good data management practices have made automation and orchestration essential to operating complex hyperscale infrastructures.

Large and medium enterprises today are trying to mimic the advanced orchestration systems of the hyperscalers in their private clouds, aiming for the flexibility and agility of the public cloud without the cost or technology lock-in. Dell/EMC, HPE, VMware, and the other private cloud vendors are racing to build competitive on-premises systems for these clients. But as enterprises look to these new task engines for salvation, they need to understand what the public cloud companies figured out a long time ago.

Much has been made about digital transformation in recent years. While IT has a history of hype around processing power, storage form factors, and network capacities, data is the true objective. And this was true long before the advent of machine learning and AI.

Data governs the entirety of the IT systems that underpin business processes. All systems operations are based on data: inventory, configuration, performance, administrative data, and so on. Managing and operating these environments is nothing more than manipulating this data to achieve new capabilities and capacities.

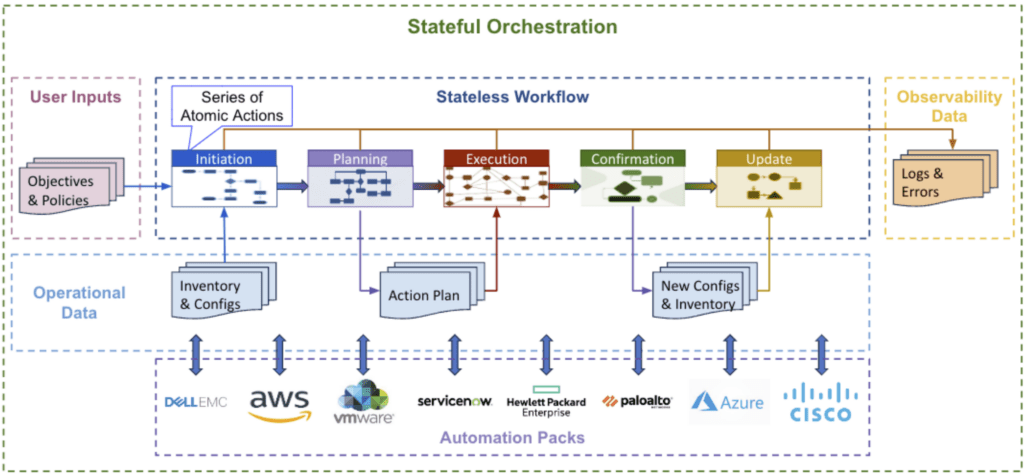

The first step in building an orchestration platform, like any data management system, is to understand the types of data we deal with. There are three main types:

Stateless Workflow: The codified logic that will achieve the desired result. It combines atomic actions against specific target end systems that collect, transform, and distribute information. Workflows are codified in an "if this, then that" (IFTTT) structure. The key design objectives include:

The stateless nature means that the logic can be tested outside of a working environment, and the workflow performs as expected without maintaining the state of the target end systems.
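To make the testability point concrete, here is a minimal Python sketch of a stateless workflow. It is not Orchestral's implementation; the function name `config_drift_workflow`, the action names, and the stubbed configurations are all hypothetical. The workflow holds only logic, while its data and its actions are passed in, so it can be exercised with stubs and no live devices.

```python
from typing import Callable, Dict, List

# Hypothetical action signature: takes workflow data, returns a result dict.
Action = Callable[[Dict[str, str]], Dict[str, str]]


def config_drift_workflow(data: Dict[str, str], actions: Dict[str, Action]) -> str:
    """If the running config differs from the recorded one, then open a ticket."""
    running = actions["get_running_config"](data)["config"]
    recorded = actions["get_recorded_config"](data)["config"]
    if running == recorded:
        return "no_drift"
    actions["create_ticket"]({**data, "runningConfig": running})
    return "ticket_created"


# Stub actions stand in for real end systems, so the logic runs offline.
tickets: List[Dict[str, str]] = []


def fake_create_ticket(data: Dict[str, str]) -> Dict[str, str]:
    tickets.append(data)
    return {"status": "ok"}


stub_actions: Dict[str, Action] = {
    "get_running_config": lambda d: {"config": "hostname edge-1\nntp server 10.0.0.5"},
    "get_recorded_config": lambda d: {"config": "hostname edge-1"},
    "create_ticket": fake_create_ticket,
}

outcome = config_drift_workflow({"ipAddress": "192.0.2.10"}, stub_actions)
print(outcome)  # ticket_created
```

Because the function keeps no state of its own, swapping the stubs for real actions changes where the data comes from but not the logic under test.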

Workflow Data: Defines the objectives, constraints, and other data required to perform the desired orchestration. This operational data is what gives the orchestration its stateful nature and includes:

Operational data must be available to each of the needed workflow elements. Common approaches include:

Automation Packs: The means of communication between the various components. Modern technologies communicate information, configuration, and actions through APIs, and each technology exposes APIs that vary by manufacturer, domain, and model. These API packs have the following characteristics:

The Automation Packs allow the logic to remain independent of the specific technologies.
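One way to picture this independence is an adapter interface. The sketch below is an assumption about the general pattern, not the structure of Orchestral's packs: `TicketPack`, both fake pack classes, and the payload shapes are hypothetical. The workflow logic in `report_drift` depends only on the interface, so the vendor behind it can change without touching the logic.

```python
from typing import Dict, List, Protocol


class TicketPack(Protocol):
    """Hypothetical interface every ticketing pack is assumed to satisfy."""

    def create_ticket(self, summary: str) -> str: ...


class FakeServiceNowPack:
    """Stand-in for a ServiceNow pack; records payloads instead of calling an API."""

    def __init__(self) -> None:
        self.sent: List[Dict[str, str]] = []

    def create_ticket(self, summary: str) -> str:
        self.sent.append({"short_description": summary})
        return f"INC{len(self.sent):07d}"


class FakeOtherPack:
    """Stand-in for a second, unnamed ticketing system with a different payload."""

    def __init__(self) -> None:
        self.sent: List[Dict[str, Dict[str, str]]] = []

    def create_ticket(self, summary: str) -> str:
        self.sent.append({"fields": {"summary": summary}})
        return f"TASK-{len(self.sent)}"


def report_drift(pack: TicketPack, device_ip: str) -> str:
    # The logic never sees vendor-specific payloads, only the interface.
    return pack.create_ticket(f"Configuration drift detected on {device_ip}")


print(report_drift(FakeServiceNowPack(), "192.0.2.10"))  # INC0000001
print(report_drift(FakeOtherPack(), "192.0.2.10"))       # TASK-1
```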

Below is an illustration of how these three data types come together in an orchestration platform.

Figure 1 – Generic Data Sets Required to Orchestrate a Workflow

Orchestral, a leading provider of closed-loop orchestration, has developed its Symphony platform around these data management principles. To highlight how their approach differs from those that only deploy task engines, let’s walk through an example of the Configuration Drift use case.

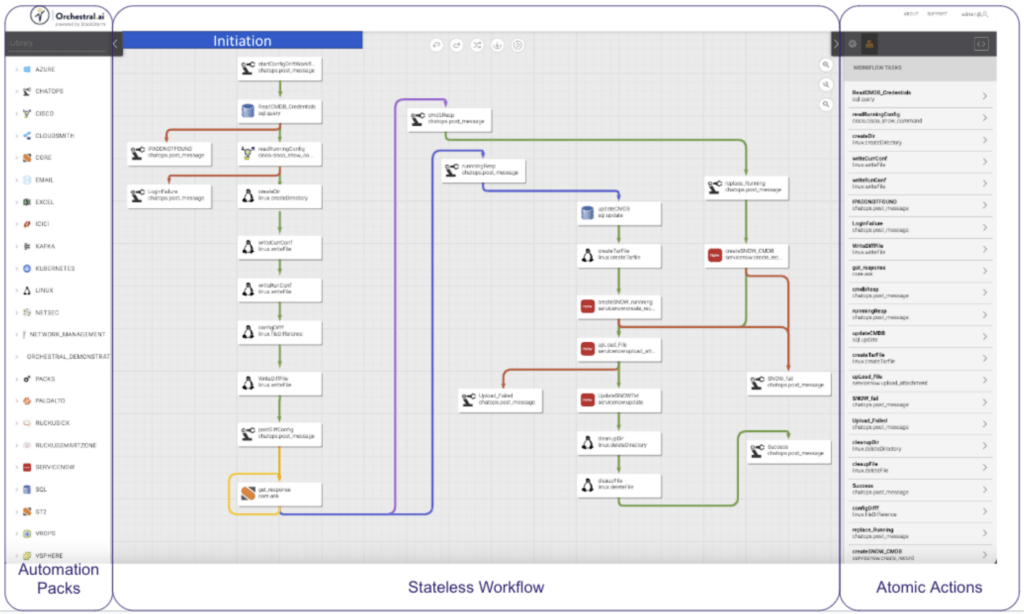

Below is the workflow development screen. From this screen, you can see the available API packs, the workflow, and the atomic actions that make up the workflow.

Figure 2 – Orchestral Workflow Overview

The Stateless Workflow is shown in a flowchart format describing the sequence of events. This sequence of events would be the same for any environment.

The Automation Packs highlight the library of connections made available to the workflow.

Operational Data provides the details for the specific deployment. These can include:

Each workflow element is stateless without the underlying data elements that support it. Composer builds and maintains a data library as it conducts the orchestration associated with each workflow. Each atomic action can access or update the data in the data library, allowing the data to be passed down the workflow.
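The idea of a data library passed down the workflow can be sketched in a few lines of Python. This is an illustration under assumptions rather than Composer's internals: the step functions, the `run_workflow` helper, and the key names are hypothetical. Each atomic action reads from and writes to one shared context, which is seeded with the operational data for a specific deployment.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Step = Callable[[Context], None]


def collect_running_config(ctx: Context) -> None:
    # A real action would query the device through an automation pack.
    ctx["runningConfig"] = "hostname edge-1\nntp server 10.0.0.5"


def detect_drift(ctx: Context) -> None:
    ctx["drift"] = ctx["runningConfig"] != ctx["recordedConfig"]


def build_summary(ctx: Context) -> None:
    state = "drifted from" if ctx["drift"] else "matches"
    ctx["summary"] = f"{ctx['ipAddress']} {state} the recorded configuration"


def run_workflow(steps: List[Step], operational_data: Context) -> Context:
    ctx = dict(operational_data)  # copy so the caller's data is not mutated
    for step in steps:
        step(ctx)  # each step may read or update the shared data
    return ctx


final = run_workflow(
    [collect_running_config, detect_drift, build_summary],
    {"ipAddress": "192.0.2.10", "recordedConfig": "hostname edge-1"},
)
print(final["summary"])  # 192.0.2.10 drifted from the recorded configuration
```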

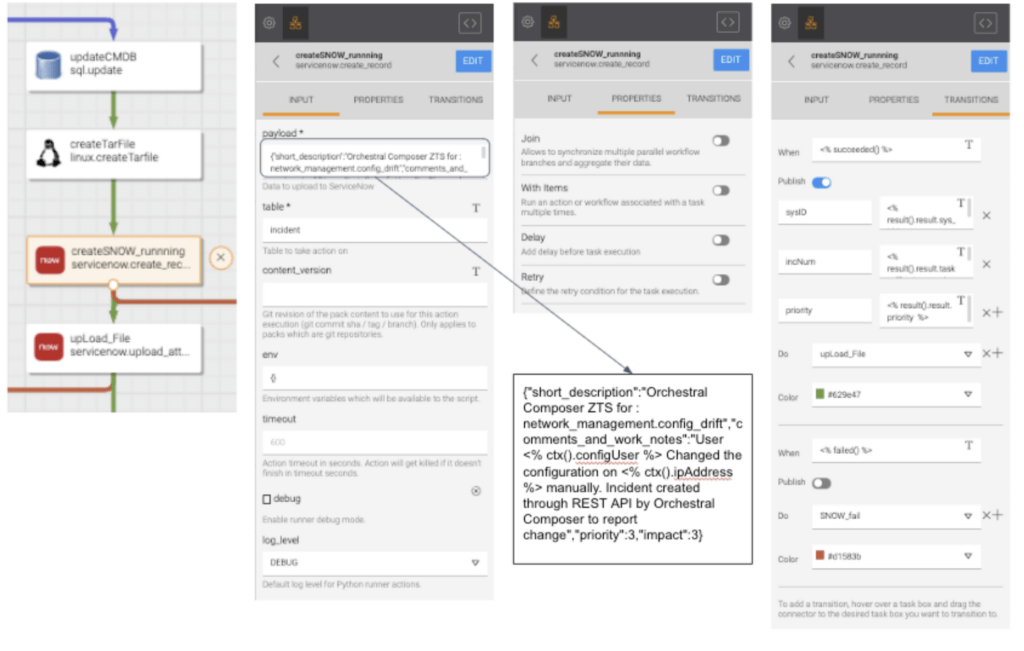

Let’s look at the details for one of the actions, createSNOW_running. This action is called after the user has indicated that the running configuration should be kept and the CMDB record for the device should be updated to reflect it. The action takes the data gathered from the device and creates a ServiceNow ticket indicating that the device’s CMDB record has been updated. Composer makes the API calls to ServiceNow without the user needing to know the API structure.

In the Input section for the action, the payload provides the details of the data to upload into ServiceNow using the APIs of the ServiceNow pack. For example, <% ctx().configUser %> indicates the ServiceNow user logging the ticket and <% ctx().ipAddress %> the IP address of the device.
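To show what resolving such expressions involves, the following Python sketch substitutes `<% ctx().name %>` placeholders with values from a context dictionary. It is a simplified stand-in, not the expression engine Composer uses: the `render` helper, the payload field names, and the sample values are hypothetical, and only the simple `ctx().name` form is handled.

```python
import re
from typing import Any, Dict

# Matches only the simple form <% ctx().name %>.
CTX_EXPR = re.compile(r"<%\s*ctx\(\)\.(\w+)\s*%>")


def render(template: str, ctx: Dict[str, Any]) -> str:
    """Replace each placeholder with the matching context value."""

    def lookup(match: "re.Match[str]") -> str:
        return str(ctx[match.group(1)])  # raises KeyError if the value is missing

    return CTX_EXPR.sub(lookup, template)


# Hypothetical payload; real field names depend on the target API.
payload_template = {
    "caller": "<% ctx().configUser %>",
    "description": "CMDB updated to the running configuration of <% ctx().ipAddress %>",
}
ctx = {"configUser": "netops.bot", "ipAddress": "192.0.2.10"}

payload = {key: render(value, ctx) for key, value in payload_template.items()}
print(payload["description"])
# CMDB updated to the running configuration of 192.0.2.10
```

The template stays the same for every device; only the context values change between runs.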

Users can also provide additional properties that define how the action runs, such as synchronizing it with other actions, delaying it, or retrying it should it fail. The final tab provides transition options for the action. In this example, transitions are defined for when the action succeeds and for when it fails.

Figure 3 – Detailed view of the data supporting the createSNOW_running atomic action
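The retry, delay, and transition options described for the action can be approximated in plain Python. This is a rough sketch under assumptions, not how Composer schedules actions: `run_action`, its parameters, and the transition names `update_cmdb` and `notify_operator` are hypothetical.

```python
import time
from typing import Any, Callable, Dict

Context = Dict[str, Any]


def run_action(
    action: Callable[[Context], None],
    ctx: Context,
    retries: int = 2,
    delay_seconds: float = 0.0,
    on_success: str = "next",
    on_failure: str = "notify_operator",
) -> str:
    """Run an action, retrying on failure, and return the transition to follow."""
    for attempt in range(retries + 1):
        try:
            action(ctx)
            return on_success
        except Exception as exc:
            ctx["lastError"] = str(exc)
            if attempt < retries:
                time.sleep(delay_seconds)  # wait before the next attempt
    return on_failure


# A stub action that fails twice and then succeeds.
calls = {"count": 0}


def flaky_create_ticket(ctx: Context) -> None:
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("ticketing system unavailable")
    ctx["ticket"] = "created"


ctx: Context = {"ipAddress": "192.0.2.10"}
transition = run_action(flaky_create_ticket, ctx, retries=2, on_success="update_cmdb")
print(transition, calls["count"])  # update_cmdb 3
```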

The stateless nature of the workflows allows them to be used as templates for other environments where the specific data of that environment would give the orchestration state.

The digital nature of information technology means that data is at the core of everything. The specific actions, system inventory, communications, and governing policies are codified as data sets. The atomic actions perform data manipulation activities against the data set. So, automation and orchestration of IT operations become a data management problem. And this approach is different from the action engines often used today.

Orchestral’s Symphony Platform with Composer is a leading orchestration platform built on data management principles. Workflows are stateless series of atomic actions, operational data is cataloged and accessible by each atomic action, and the API Packs provide the communications to systems. This approach allows portability, repeatability, and consistency in actions regardless of vendors of the underlying technologies.

Learn more at: Orchestral.ai